The Importance of High-Quality Data

In the modern business landscape, data is often considered the lifeblood of an organization. Industries like banking, insurance, retail, and telecommunications heavily rely on data to drive performance and gain a competitive edge.

The Cost of Poor Data

Imagine you're an archer, and your data is the bow and arrow. If the bow is warped or the arrow is bent, you're less likely to hit the target. Similarly, inaccurate data can severely impact a business, leading to consequences like reduced sales and increased operational inefficiencies.

The Solution: Data Cleansing

To prevent falling into the pitfalls of poor data quality, companies must prioritize data cleansing. Data quality issues aren't confined to a specific system or department; they can infiltrate every corner of an organization. Therefore, a variety of data cleansing approaches are necessary to mitigate these issues.

Why Should You Care?

Operational Efficiency: Clean data helps streamline operations and reduces the time and effort spent correcting errors.

Enhanced Profits: Accurate data can lead to better decision-making, which in turn can increase profitability.

Customer Satisfaction: High-quality data can improve customer targeting and engagement, thereby increasing satisfaction and loyalty.

Understanding Data Cleaning: The Filter of Quality

Imagine data cleaning as a high-quality filter for your morning coffee. You wouldn't want stray coffee grounds or impurities in your cup, would you? Similarly, data cleaning acts as a filter that removes any "impurities" from your dataset, ensuring you're working with the most accurate and useful information.

The Risks of Merging Data

When collecting data from multiple sources, it's like pouring different brands of coffee beans into a single grinder. There's a risk that inconsistencies and errors will arise during the merging process, corrupting the final brew—or in this case, your dataset.

What Does Data Cleaning Involve?

Data cleaning involves identifying and rectifying errors and inconsistencies in data to improve its quality. This includes:

- Removing incorrect or corrupted data

- Standardizing improperly formatted data

- Eliminating duplicate records

- Filling in incomplete data

Why No One-Size-Fits-All?

Data is as diverse as coffee beans—what works for one type may not work for another. Therefore, data cleaning methods will differ based on the specifics of each dataset. However, it's essential to establish a reliable template or protocol for the data cleaning process. Think of it as your "coffee brewing guide," ensuring you make the perfect cup each time you go through the process.

Key Takeaways

- Versatile Nature: Data cleaning methods should adapt to the unique characteristics of each dataset.

- Template for Consistency: Establish a reliable data cleaning process to ensure you're doing it correctly every time.

The Data Cleaning Cycle: A Step-by-Step Guide

Data cleaning is a dynamic and essential process in data analysis. While the specifics may vary depending on your dataset, there are core steps that provide a strong foundation for any data cleaning task. Let's dive into each step to understand its purpose and importance.

1. Eliminate Duplicates and Irrelevant Observations

The first step is akin to decluttering a room: you remove items that are either redundant or don't serve a purpose.

Duplicate Observations: Often arise during data collection and can skew results if not removed.

Irrelevant Observations: These are the data points that don't align with the questions you're aiming to answer. They can be safely discarded without affecting the integrity of your analysis.

Redundant Observations: These are data points that repeat and can distort the quality of your results.
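
As a rough illustration of this first step, here is a minimal pandas sketch. The survey table, its column names, and the question being asked ("How satisfied are US respondents?") are all made up for the example.

```python
import pandas as pd

# Hypothetical survey data; the third row is an exact copy of the first.
survey = pd.DataFrame({
    "respondent_id": [101, 102, 101, 103, 104],
    "country": ["US", "UK", "US", "Canada", "US"],
    "satisfaction": [4, 5, 4, 3, 2],
})

# Drop exact duplicate rows, keeping the first occurrence.
deduped = survey.drop_duplicates()

# Keep only the observations relevant to the (assumed) question:
# "How satisfied are US respondents?"
us_only = deduped[deduped["country"] == "US"].reset_index(drop=True)

print(us_only)
```

Whether a row counts as "irrelevant" depends entirely on the question you are trying to answer, so the filter condition will differ from one analysis to the next.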

2. Rectify Structural Errors

The next step is to fix the "skeleton" of your dataset. Structural errors can mess up the architecture, making the data unreliable.

Typos and Naming Inconsistencies: Check for misspelled feature names or attributes that are labeled differently but mean the same thing.

Mislabeling Classes: Ensure that classes that should be grouped together are not separated due to errors like inconsistent capitalization.
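
A small sketch of what fixing structural errors can look like in pandas. The misspelled column name, the labels, and the "n/a" placeholder are invented for illustration.

```python
import pandas as pd

# Hypothetical raw data with a misspelled column name and inconsistent labels.
raw = pd.DataFrame({
    "Cutsomer": ["Alice", "Bob", "Cindy", "David"],
    "plan": ["Premium", "premium ", "BASIC", "n/a"],
})

# Fix the misspelled feature name.
fixed = raw.rename(columns={"Cutsomer": "customer"})

# Normalize whitespace and capitalization so that equivalent labels
# collapse into a single class.
fixed["plan"] = fixed["plan"].str.strip().str.lower()

# Treat the placeholder string "n/a" as a real missing value.
fixed["plan"] = fixed["plan"].mask(fixed["plan"] == "n/a")

print(fixed["plan"].value_counts(dropna=False))
```

After normalization, "Premium" and "premium " are counted as the same class instead of two separate ones.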

3. Manage Outliers

Think of outliers as the eccentric artists in a community of data points; they might be unique, but they can distort the overall picture.

Identifying Outliers: Use statistical methods to detect data points that significantly deviate from the rest of the dataset.

Removal or Retention: Decide whether the outliers are genuinely anomalous or if they provide valuable insights.
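
One common statistical method for spotting outliers is the interquartile-range (IQR) rule. The sketch below applies it to made-up order values. The 1.5 multiplier is a widely used convention, not a requirement, and other methods (such as z-scores) may suit your data better.

```python
import pandas as pd

# Hypothetical order values; 950 is far from the rest.
orders = pd.Series([20, 22, 19, 24, 21, 23, 950], name="order_value")

# IQR rule: flag points more than 1.5 * IQR outside the middle 50% of the data.
q1 = orders.quantile(0.25)
q3 = orders.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

is_outlier = (orders < lower) | (orders > upper)
print(orders[is_outlier])
```

The code only flags the suspicious value. Deciding whether to remove it or keep it is still a judgment call that depends on what the value represents.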

4. Address Missing Data

Handling missing data is a delicate operation and needs to be approached with caution.

Dropping Observations: The straightforward approach is to remove data points that have missing values. However, this might lead to the loss of valuable information.

Imputation: Another method is to fill in the gaps using data from other observations. This can be a more nuanced approach but must be done carefully.

Flagging: Sometimes, the fact that data is missing can be informative. In such cases, flagging the missing values can help your analysis algorithm take this into account.
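
The three options above can be sketched in pandas as follows. The customer table is hypothetical, and median imputation is just one simple choice among many.

```python
import pandas as pd

# Hypothetical customer data with gaps in the "income" column.
customers = pd.DataFrame({
    "customer": ["Alice", "Bob", "Cindy", "David"],
    "income": [52000.0, None, 61000.0, None],
})

# Option 1: drop observations with missing values.
dropped = customers.dropna(subset=["income"])

# Options 2 and 3: flag which values were missing, then impute them
# with the median of the observed values.
imputed = customers.copy()
imputed["income_missing"] = imputed["income"].isna()
imputed["income"] = imputed["income"].fillna(imputed["income"].median())

print(imputed)
```

Creating the flag column before imputing matters: once the gaps are filled, there is no other way to tell real values from imputed ones.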

Post-Cleaning Reflections: Questions to Ask

After you've gone through the meticulous process of cleaning your data, it's time for some reflection. Think of this as a post-game analysis where you evaluate your performance and strategize for the next round. Here are some pivotal questions to consider:

1. Data Sensibility Check

Ask yourself, does the cleaned data make logical sense? This is similar to proofreading a document. Ensure that the data aligns with the known facts and constraints related to the subject matter.

2. Field-Specific Rules

Does the data adhere to the rules or standards specific to its field? For example, if you're working with financial data, check if the fiscal parameters like revenue, costs, and margins fall within industry norms.
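
Checks like these can be written down as explicit rules. The sketch below uses invented financial figures, and the margin range is a placeholder rather than a real industry norm.

```python
import pandas as pd

# Hypothetical cleaned financial data.
financials = pd.DataFrame({
    "company": ["A", "B", "C"],
    "revenue": [1200.0, 800.0, 500.0],
    "costs": [900.0, 950.0, -50.0],
})
margin = (financials["revenue"] - financials["costs"]) / financials["revenue"]

# Each rule is a boolean Series: True where the row passes.
# The margin bounds are assumed values for illustration only.
rules = {
    "revenue_non_negative": financials["revenue"] >= 0,
    "costs_non_negative": financials["costs"] >= 0,
    "margin_in_range": margin.between(-0.5, 0.6),
}

# Count violations per rule instead of stopping at the first failure.
violations = {name: int((~passed).sum()) for name, passed in rules.items()}
print(violations)
```

Here company C fails two rules at once (negative costs and an implausible margin), which is a hint to go back and inspect that record.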

3. Hypothesis Evaluation

Does the cleaned data support, refute, or bring new insights to your initial hypothesis? This is the moment of truth where you see if your hypothesis stands or needs revising.

4. Pattern Discovery

Can you identify any trends or patterns that could inform your next hypothesis or research question? Like connecting the dots in a puzzle, look for relationships that can guide your next steps.

5. Data Quality Assessment

Are there any lingering issues that could be attributed to poor data quality? Be honest in this evaluation. If you identify problems, you may need to revisit some steps in your data cleaning process.

The post-cleaning phase is an opportunity for introspection and analysis. By carefully considering these questions, you not only validate the quality of your cleaned data but also set the stage for meaningful analysis and future research.

In this code snippet, we use the pandas library to perform some basic data cleaning tasks:

Remove Invalid Rows: We filter out rows where the 'Age' is 'Unknown' or where the 'Gender' is missing (None).

Standardize Country Names: We convert all country names to uppercase for consistency.

    import pandas as pd

    # Original DataFrame
    data = {
        'Name': ['Alice', 'Bob', 'Cindy', 'David'],
        'Age': [25, 30, 35, 'Unknown'],
        'Country': ['US', 'UK', 'Canada', 'US'],
        'Gender': ['F', 'M', 'F', None]
    }
    df = pd.DataFrame(data)
    print("Original DataFrame:")
    print(df)

    # Data Cleaning Steps
    # Step 1: Remove rows with missing or 'Unknown' values.
    # .copy() makes df_cleaned an independent DataFrame, so the column
    # assignment below cannot trigger pandas' SettingWithCopyWarning.
    df_cleaned = df[df['Age'] != 'Unknown']
    df_cleaned = df_cleaned.dropna(subset=['Gender']).copy()

    # Step 2: Standardize the Country names to uppercase
    df_cleaned['Country'] = df_cleaned['Country'].str.upper()

    # Cleaned DataFrame
    print("\nCleaned DataFrame:")
    print(df_cleaned)

The result is a cleaned DataFrame ready for further analysis.

Let's test your knowledge. Click the correct answer from the options.

What are the steps of the data cleaning process?

Click the option that best answers the question.

- Removing duplicates

- Fixing structural errors

- Managing unwanted outliers

- All of the above

Why Do We Clean Data?

Most datasets collected during a study contain “dirty” data that can lead to disappointing or misleading conclusions if utilized. As a result, scientists must ensure that data is well-formatted and free of irrelevant information before using it. Issues that develop as a result of data sparsity and formatting discrepancies are eliminated during data cleaning. Cleaning is done in data analysis not only to make the dataset more appealing to analysts but also to address and prevent problems that may result from "dirty" data.

Data cleansing is critical for businesses because poor data can undermine marketing effectiveness and revenue. While data problems may never be completely resolved, reducing them to a minimum has a substantial impact on efficiency.

Try this exercise. Is this statement true or false?

The data cleaning process increases the accuracy and efficiency of raw data.

Press true if you believe the statement is correct, or false otherwise.

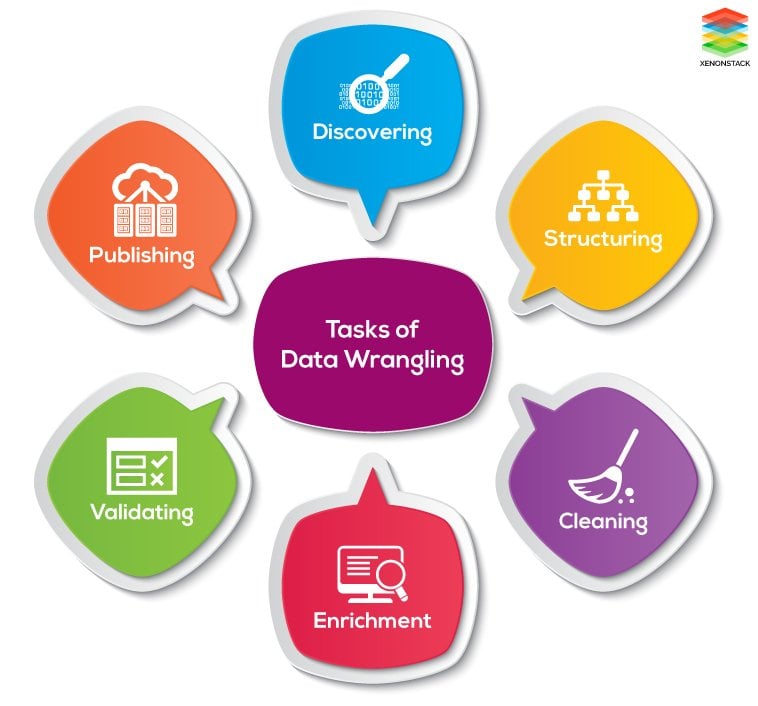

Unraveling Data Wrangling: A Closer Look

Data wrangling, or data preparation, serves as the backstage crew in the theater of data analytics. It ensures that the "actors"—your data—are ready for the spotlight. It's not just about gathering data, but about making it presentable, reliable, and actionable.

What Does Data Wrangling Involve?

Data wrangling is the art of transforming raw data from multiple sources into a format that is easy to analyze. It involves:

Data Collection: Aggregating data from various sources.

Data Cleaning: Removing inconsistencies and errors.

Data Transformation: Converting the data into a format suitable for analysis.

Data Integration: Combining different datasets into a coherent whole.

Why Is It Important?

In our data-driven world, companies generate massive amounts of data. Data wrangling serves as the funnel that channels this influx into manageable, useful information. It's like distilling crude oil into refined petroleum products: each has its specific use and value.

Objectives of Data Wrangling

Let's break down the goals you should aim for when wrangling data:

1. Quick and Accurate Data Delivery

The primary objective is to provide clean, useful data to business analysts without any unnecessary delay. It's about efficiency and effectiveness.

2. Streamline Data Gathering and Organization

Reduce the time and effort required in the data collection phase. The quicker you can organize your data, the sooner you can move to the analysis stage.

3. Focus on Data Analysis

The aim is to allocate more time for data analysis by minimizing the time spent on data preparation. Think of it as prepping the ingredients for a dish so you can spend more time cooking and seasoning.

4. Enable Informed Decision-Making

The ultimate goal is to facilitate better business decisions based on reliable data. It's about turning raw facts into actionable insights.

The Data Wrangling Playbook: A Six-Step Guide

Data wrangling is often dubbed the most crucial and time-consuming phase in data analytics. Think of it as the pre-production stage of a movie where the script is revised, the sets are built, and the cast is prepared for shooting. The six essential steps in the data wrangling process serve as your script for turning raw data into analytical gold.

1. Data Discovery: The Reconnaissance Mission

Data Discovery is your first handshake with your data. In this step, you explore the dataset to understand its structure, contents, and nuances. You're essentially scoping out the "terrain" you will navigate in the subsequent steps.

- Key Actions: Review metadata, conduct basic statistical analyses, visualize sample data.
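
A first look at a dataset might resemble the sketch below. The viewing table and its columns are hypothetical. The calls shown (`dtypes`, `describe()`, `isna().sum()`) are standard pandas ways to inspect structure, basic statistics, and missing values.

```python
import pandas as pd

# Hypothetical viewing data.
viewing = pd.DataFrame({
    "user_id": [1, 2, 3, 4],
    "minutes_watched": [42.0, None, 118.5, 77.0],
    "device": ["tv", "phone", "tv", None],
})

# Structure: column names and inferred types.
print(viewing.dtypes)

# Basic statistics for the numeric columns.
summary = viewing.describe()
print(summary)

# Number of missing values per column.
missing_counts = viewing.isna().sum()
print(missing_counts)
```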

2. Data Organization/Structuring: The Blueprint Phase

The raw data you collect is usually unstructured and chaotic, like a pile of Lego blocks. Your task is to organize these blocks into meaningful structures.

- Key Actions: Reformat data, arrange columns and rows logically, map data to a suitable model for analysis.
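
One typical restructuring task is turning a "wide" table into a "long" one, so that each row holds a single observation. The store and quarter names below are made up. `melt` is the pandas method for this reshape.

```python
import pandas as pd

# Hypothetical wide table: one column per quarter.
wide = pd.DataFrame({
    "store": ["North", "South"],
    "Q1": [100, 80],
    "Q2": [120, 95],
})

# Reshape to long format: one row per (store, quarter) observation.
long = wide.melt(id_vars="store", var_name="quarter", value_name="sales")
long = long.sort_values(["store", "quarter"]).reset_index(drop=True)

print(long)
```

The long layout is usually easier to filter, group, and plot, although the best structure depends on the analysis you have in mind.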

3. Data Cleaning: The Sanitization Process

This step is akin to removing the bad apples from the basket. Here, you correct or remove problems such as errors and unwarranted outliers to ensure that your data is of high quality.

- Key Actions: Standardize formats, handle missing values, correct inaccuracies, remove duplicates.

4. Data Enriching: Adding the Seasoning

Once you've got the basics right, consider enhancing your dataset for richer analysis. This could involve adding new variables or merging with other datasets for a more comprehensive view.

- Key Actions: Introduce new variables, merge datasets, enrich data with external sources.
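
As a sketch of enrichment, the example below derives a new variable and merges in an external lookup table. Both tables, and the idea of a country-to-region lookup, are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical order data.
orders = pd.DataFrame({
    "order_id": [1, 2, 3],
    "country_code": ["US", "CA", "US"],
    "quantity": [2, 1, 5],
    "unit_price": [9.99, 24.50, 3.00],
})

# Hypothetical external lookup table.
regions = pd.DataFrame({
    "country_code": ["US", "CA"],
    "region": ["North America", "North America"],
})

# Introduce a new variable derived from existing columns.
orders["total"] = orders["quantity"] * orders["unit_price"]

# Merge in the external context; a left join keeps every original order.
enriched = orders.merge(regions, on="country_code", how="left")

print(enriched)
```

A left join is used here so that enrichment never silently drops rows: orders with no match in the lookup simply get a missing region.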

5. Data Validating: The Quality Check

This is where you validate the integrity of your data. Think of this as the quality assurance phase in manufacturing, where each product (or data point, in this case) undergoes rigorous testing.

- Key Actions: Apply validation rules, carry out sanity checks, ensure data complies with predefined quality standards.
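
Validation rules can be expressed as named checks that either pass or fail. The records, the age range, and the allowed country list below are all hypothetical, so substitute the quality standards that apply to your own data.

```python
import pandas as pd

# Hypothetical records: a repeated id and an implausible age.
records = pd.DataFrame({
    "id": [1, 2, 2, 4],
    "age": [25, 30, 30, 140],
    "country": ["US", "UK", "UK", "Canada"],
})

# Each check evaluates to True (pass) or False (fail).
checks = {
    "id_is_unique": bool(records["id"].is_unique),
    "age_in_range": bool(records["age"].between(0, 120).all()),
    "country_allowed": bool(records["country"].isin(["US", "UK", "Canada"]).all()),
}

failed = [name for name, ok in checks.items() if not ok]
print(failed)
```

Collecting every failed check, rather than stopping at the first, gives you a complete picture of what still needs fixing before publishing.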

6. Data Publishing: The Final Act

The cleansed, enriched, and validated data is now ready for the limelight. In this step, you make the data available for downstream analysis, effectively setting the stage for insights to be drawn.

- Key Actions: Store data in accessible formats, document metadata, and ensure data is ready for analysis.

Build your intuition. Is this statement true or false?

Cleaning is one of the steps in the data wrangling process.

Press true if you believe the statement is correct, or false otherwise.

Data Wrangling Tools

Data analysts often spend more of their time wrangling data than analyzing it. Employers typically look for data wranglers who know a language commonly used for statistics, such as R or Python, along with SQL, Scala, PHP, or other programming languages. Alongside these skills, it helps to know the tools frequently used in data wrangling. Below is a list of some commonly used tools.

Tabula

Tabula is a tool that extracts data from PDF files. It provides a simple, user-friendly interface for exporting that data to a CSV file or a Microsoft Excel spreadsheet. Tabula is available for Mac, Windows, and Linux.

Talend

Talend is a collection of tools for data wrangling, data preparation, and data cleansing. It's a browser-based platform with a simple point-and-click interface that's ideal for businesses, which makes data manipulation much simpler than it would be with code-heavy tools.

Parsehub

If you're new to Python or are having trouble with it, Parsehub is a good place to start. Parsehub is a web scraping and data extraction tool with a user-friendly desktop interface for extracting data from a variety of interactive websites. You simply click on the data you want to collect and export it as JSON, as an Excel spreadsheet, or through an API, without writing any code. Its graphical user interface is the key selling point for newcomers.

Scrapy

Scrapy is a popular web scraping tool that is more complicated than code-free alternatives like Parsehub but much more versatile. It's a Python-based, open-source web scraping framework that's completely free to use. Scrapy is lightweight and scalable, which makes it suitable for a wide range of projects.

Benefits of Data Wrangling

Data wrangling increases the usability of your data by transforming it into a format that is compatible with your end system. As a result, data wrangling provides the following benefits:

It lets data flow quickly, because data flow activities can easily be scheduled and automated.

It combines information of various forms from different sources (databases, web services, files, etc.).

It lets people share data flow techniques quickly and easily, even when working with huge amounts of data.

Let's test your knowledge. Click the correct answer from the options.

Which of the following is a benefit of data wrangling?

Click the option that best answers the question.

- Combines data collected from various sources

- Increases the usability of data

- Both A and B

- Neither A nor B

Unveiling the Magic of Data Cleaning: A Netflix Case Study

In the vast world of data analysis, real-life examples often serve as the best teachers. Imagine you're a data analyst at Netflix, and your mission is to identify the world's most-watched movie between 6:00 pm and 10:00 pm. Sounds straightforward, right? But there's a catch: you're dealing with data files from different countries, each with its own date and time format. Let's break down how data cleaning comes into play here.

The Challenge: A Tower of Babel

You have a multitude of data files coming in from different corners of the world. Each country has its own unique way of representing date and time. Imagine trying to compare apples, oranges, and grapes when all you want is to find the most popular fruit. That's what you're up against.

Step 1: Establishing Uniformity

Your first task is to create a level playing field. You need to convert all these different date-time formats into a single, unified format. This is the data cleaning stage.

- Key Actions: Identify the unique date-time formats, convert them into a common standard, ensure accuracy.

Step 2: The Final Analysis

Once the data is cleaned and standardized, you're ready for the main event. Now it's relatively straightforward to sift through the data to identify the most-watched movie during the specified time slot.

- Key Actions: Apply filters to the cleaned data, perform the necessary computations, and identify the movie that meets the criteria.
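
The two steps can be sketched in pandas as shown below. Everything here is invented for illustration: the two country exports, their date-time formats, and the titles. The sketch also assumes each timestamp is already in the viewer's local time, so no time-zone conversion is attempted.

```python
import pandas as pd

# Hypothetical per-country exports, each with its own date-time format.
us = pd.DataFrame({
    "title": ["Movie A", "Movie B"],
    "watched_at": ["03/15/2024 07:30 PM", "03/15/2024 11:15 PM"],
})
de = pd.DataFrame({
    "title": ["Movie A", "Movie C"],
    "watched_at": ["15.03.2024 20:45", "15.03.2024 18:05"],
})

# Step 1: parse each source with its own explicit format into one datetime type.
us["watched_at"] = pd.to_datetime(us["watched_at"], format="%m/%d/%Y %I:%M %p")
de["watched_at"] = pd.to_datetime(de["watched_at"], format="%d.%m.%Y %H:%M")
views = pd.concat([us, de], ignore_index=True)

# Step 2: keep views that started from 6:00 pm up to (but not including)
# 10:00 pm, then find the title with the most views.
hour = views["watched_at"].dt.hour
evening = views[(hour >= 18) & (hour < 22)]
most_watched = evening["title"].value_counts().idxmax()

print(most_watched)
```

Passing an explicit `format` for each source avoids ambiguity: a string like "03/04/2024" means March 4 in one convention and April 3 in another, and guessing wrong would corrupt the analysis without raising any error.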

In this Netflix example, the process of converting varied data formats into a common one serves as a textbook case of data cleaning. Without this crucial step, the analysis would have been chaotic, time-consuming, and prone to errors.

Data cleaning is not merely a preparatory step; it's the linchpin that holds your analysis together. It turns a jumbled puzzle into a clear picture, enabling data analysts to derive meaningful insights efficiently.

Wrapping Up: The Power of Clean and Organized Data

In today's class, we journeyed through the essential processes of data cleaning and data wrangling. These aren't mere housekeeping chores; they're pivotal steps that set the stage for effective data analysis.

The Key Takeaways

Data Cleaning: Think of this as the grooming stage, where you remove the "noise" to reveal the true "signal" in your data. The process helps you deal with issues like inconsistencies, errors, and redundancies.

Data Wrangling: This is the preparatory phase where you gather, structure, and enrich your data, making it ready for in-depth analysis. It's like setting up the chessboard before diving into the game.

Benefits: Both these processes help you unearth the most relevant insights and make your data analysis faster and more accurate.

In this code snippet, we perform data wrangling tasks using two sample DataFrames:

Data Discovery: Use the info() method to get basic information about the DataFrame.

Data Organization: Sort the DataFrame based on the 'Age' column.

Data Enriching: Merge two DataFrames based on the 'ID' column.

Data Validating: Drop rows that have any missing values to ensure data integrity.

Data Publishing: Save the final, cleaned DataFrame to a CSV file.

    import pandas as pd

    # Original DataFrames
    data1 = {
        'ID': [1, 2, 3, 4],
        'Name': ['Alice', 'Bob', 'Cindy', 'David'],
        'Age': [25, 30, 35, 40]
    }
    data2 = {
        'ID': [3, 4, 5, 6],
        'Score': [85, 90, 88, 76],
        'Country': ['Canada', 'US', 'UK', 'Australia']
    }
    df1 = pd.DataFrame(data1)
    df2 = pd.DataFrame(data2)
    print("Original DataFrame 1:")
    print(df1)
    print("\nOriginal DataFrame 2:")
    print(df2)

    # Data Wrangling Steps
    # Step 1: Data Discovery - Get basic info
    print("\nDataFrame 1 Info:")
    df1.info()  # info() prints its summary directly and returns None

    # Step 2: Data Organization - Sort by Age
    df1_sorted = df1.sort_values(by='Age')

    # (Step 3, Data Cleaning, is skipped because this sample data is already clean.)

    # Step 4: Data Enriching - Merge DataFrames
    # An outer merge keeps every ID from both tables and fills the gaps with NaN.
    df_merged = pd.merge(df1_sorted, df2, on='ID', how='outer')

    # Step 5: Data Validating - Drop rows with missing values
    # Only IDs present in both tables (3 and 4) survive this step.
    df_validated = df_merged.dropna()

    # Step 6: Data Publishing - Save to CSV
    df_validated.to_csv('cleaned_and_enriched_data.csv', index=False)

    # Final DataFrame
    print("\nFinal DataFrame:")
    print(df_validated)

The result is a DataFrame that is ready for data analysis, having been cleaned, enriched, and validated.

One Pager Cheat Sheet

- Accurate data is critical for businesses wanting to maximize efficiency and profits, so a range of data cleaning techniques can be used to prevent any issues arising.

- Data cleaning is the process of removing incorrect, corrupted, improperly formatted, duplicate, and incomplete data from collected datasets, and is necessary to ensure a successful data analysis process.

- The data analyst must analyze the cleaned data to answer questions and spot patterns that may be used to develop the next hypothesis.

- The data cleaning process includes Data Preprocessing, Data Transformation, Data Validation and Data Analysis to ensure accuracy and uncover insights.

- Data cleaning is important to ensure that datasets used for data analysis are free of irrelevant and incorrect information, maximizing their efficiency and effectiveness in order to avoid obtaining disappointing or misleading results.

- The data cleaning process removes irrelevant and redundant information, reducing the computational complexity of the analysis and increasing its accuracy and efficiency.

- Data wrangling is the process of combining data from multiple sources and cleaning it so that it can be easily accessed and analyzed, and is essential in delivering useful data to business analysts in a timely manner so they can make better decisions.

- Data wrangling is a time-consuming process that generally involves data discovery, structuring, cleaning, enriching, validating and publishing, in order to prepare data for analysis.

- Cleaning is an essential part of the data wrangling process to remove any inaccuracies and ensure data accuracy.

- Data wranglers need to possess knowledge of statistical languages such as R or Python as well as tools like Tabula, Talend, Parsehub, and Scrapy for data wrangling, data preparation, and data cleansing.

- Data wrangling automates data flow and combines various data sources to exchange data quickly and increase usability, resulting in cost and time savings.

- The technical term of data wrangling does not involve the speedy exchange of data or the ability to quickly exchange techniques with large amounts of data as benefits; rather, it involves the ability to automatically schedule data flow activities and combine information from different sources.

- By converting the different data formats into a common format, data cleaning ensures that a data analyst can accurately identify the name of the most-watched movie between 6:00 pm and 10:00 pm.

- The main takeaway from this lesson is that data cleaning and data wrangling can significantly reduce the amount of time spent on data analysis and help identify the most important information.